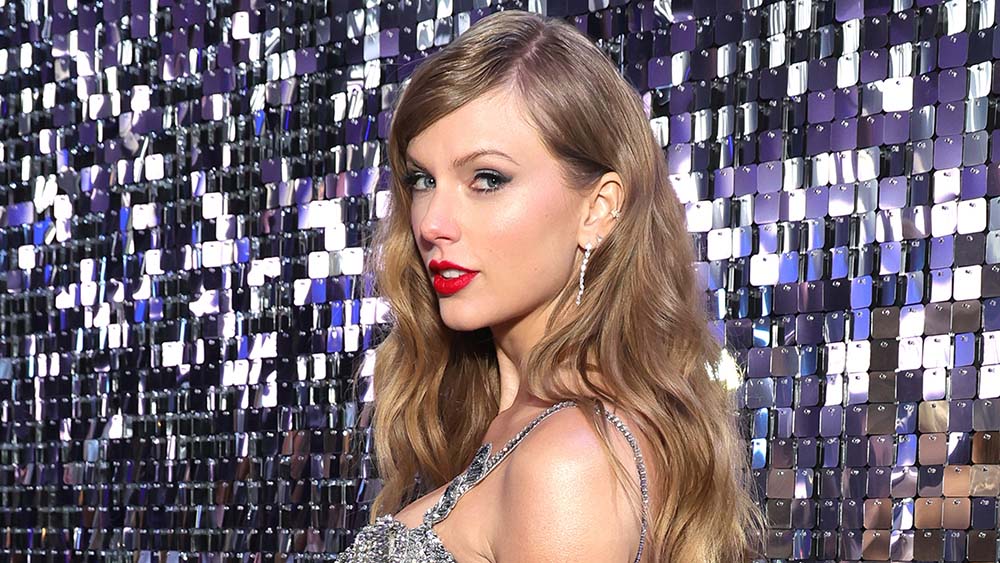

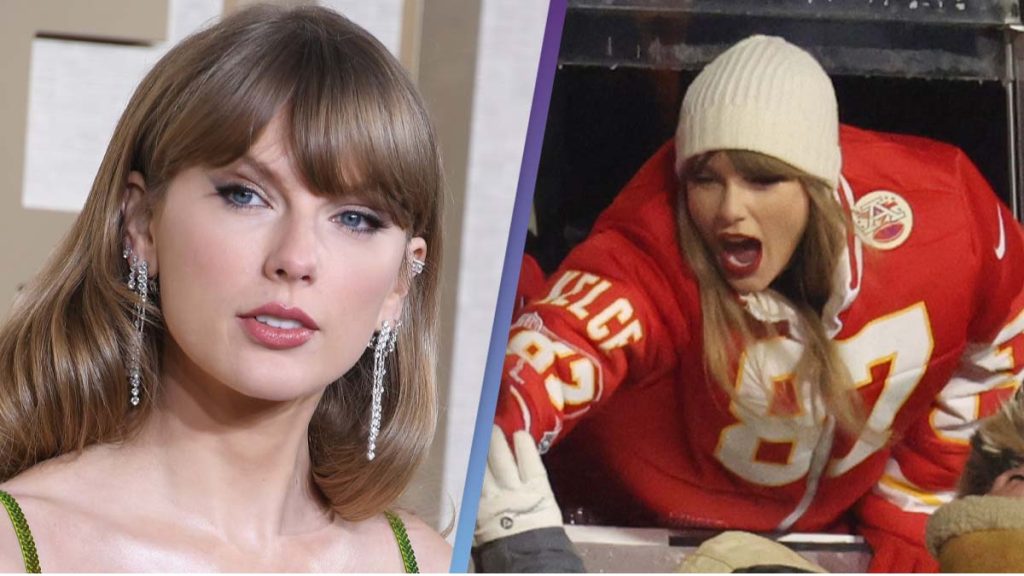

Fans React to Taylor Swift Nude Deepfake Story

In a disturbing turn of events, pornographic deepfake images of pop star Taylor Swift have surfaced online, provoking outrage among her fans and renewing concerns about the spread of malicious AI-generated content.

The images, which depict Swift in explicit and abusive scenarios, began circulating widely on the social media platform X, according to reports. Swift’s fanbase, known as the “Swifties,” quickly mobilized in a coordinated effort to counter the spread of the material. Using the hashtag #ProtectTaylorSwift, fans flooded social media with positive images of the singer and reported accounts sharing the deepfakes.

Reality Defender, a company that specializes in detecting deepfakes, reported a significant influx of nonconsensual pornographic material featuring Swift, particularly on X. The images also spread to other platforms, including Meta-owned Facebook.

Mason Allen, head of growth at Reality Defender, expressed concern over the rapid spread of the deepfake images, noting that despite efforts to remove them, many had already reached millions of users.

Researchers identified numerous AI-generated images, the most prevalent of which depicted Swift in demeaning and violent situations, many of them football-themed. The episode reflects the broader rise of explicit deepfakes, a trend that has intensified in recent years as the underlying technology has advanced.

Brittany Spanos, a senior writer at Rolling Stone and an expert on Swift’s career, highlighted how quickly fans rallied in support of the singer. She noted that the deepfake episode echoes challenges Swift has faced before, including her legal battles against abuse and harassment.

In response to inquiries about the fake images, X issued a statement reaffirming its commitment to removing nonconsensual nude images from its platform. However, the platform has faced questions about its scaled-back content moderation since its change in leadership.

Meta also condemned the dissemination of the offensive content and pledged to take appropriate action to address it.

Despite these efforts to curb the spread of deepfake material, concerns remain about the effectiveness of existing safeguards. OpenAI and Microsoft, both of which offer AI tools, acknowledged the need for stronger measures to prevent their platforms from being misused to create harmful content.

Federal lawmakers, including Representatives Yvette D. Clarke and Joe Morelle, have renewed calls for legislation to address the proliferation of deepfake pornography. Clarke emphasized the urgent need for digital watermarking of deepfake content, while Morelle underscored the real-world impact of such material on its victims.

As the debate over deepfake regulation intensifies, Swift and her supporters continue to advocate for stronger measures to combat online abuse and protect individuals from the harmful effects of manipulated content.