The evolution of Large Language Models (LLMs) into capable, action-oriented agents has introduced a major architectural challenge: how to connect these AI systems to external tools, data, and services in a scalable and secure way. The Model Context Protocol (MCP) has emerged as the leading open-source standard for solving this problem, defining a universal way for AI agents to communicate with the outside world.

This article provides a comprehensive analysis of this groundbreaking protocol, explaining its architecture, core components, security model, and its role in the broader AI ecosystem. We will break down how it solves critical integration problems and why it’s being rapidly adopted by industry leaders like Google, OpenAI, and Docker.

MCP Infographics

Check out the following infographic for a visual explanation of what MCP is:

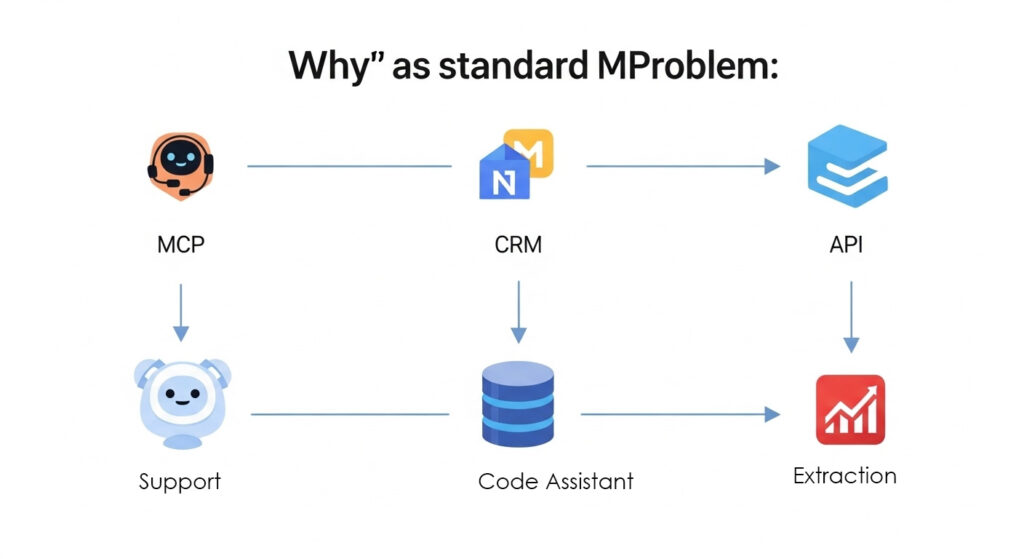

The “N×M” Problem: Why a Standard Was Necessary

Before MCP, connecting AI to external tools was a fragmented and inefficient process. Developers faced the “N×M” integration problem: connecting N different AI applications (like a support bot or a code assistant) to M different external services (a CRM, a database, an API). Without a common standard, each connection required a unique, point-to-point integration, leading to an explosion of costly and brittle custom code.
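The arithmetic behind the name is easy to make concrete. The sketch below compares point-to-point integrations with a shared protocol, where each application implements client support once and each service implements a server once. The counts of 5 applications and 8 services are illustrative numbers chosen for the example, not survey data.

```python
def point_to_point(n_apps: int, m_services: int) -> int:
    """Every application needs its own integration with every service: N x M."""
    return n_apps * m_services


def shared_protocol(n_apps: int, m_services: int) -> int:
    """Each application implements the protocol once (client side) and each
    service implements it once (server side): N + M."""
    return n_apps + m_services


if __name__ == "__main__":
    apps, services = 5, 8  # illustrative numbers only
    print(point_to_point(apps, services))   # 40 custom integrations
    print(shared_protocol(apps, services))  # 13 protocol implementations
```

The gap widens quickly: each new application or service adds one implementation under a shared protocol, instead of one integration per counterpart.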

Early solutions, like OpenAI’s “function-calling,” were vendor-specific. This created “walled gardens” that locked developers into a single provider’s ecosystem. The industry needed a universal, model-agnostic standard to foster interoperability and break down these information silos. The Model Context Protocol (MCP) was created by Anthropic to be that standard.

Core Architecture: How MCP Works

MCP is often described with the powerful analogy of a “USB-C port for AI applications.” Just as USB-C allows any compliant device to connect to a vast ecosystem of peripherals, MCP provides a standard software protocol for any compliant AI model to connect with any compliant tool. This creates a “plug-and-play” environment for agentic AI.

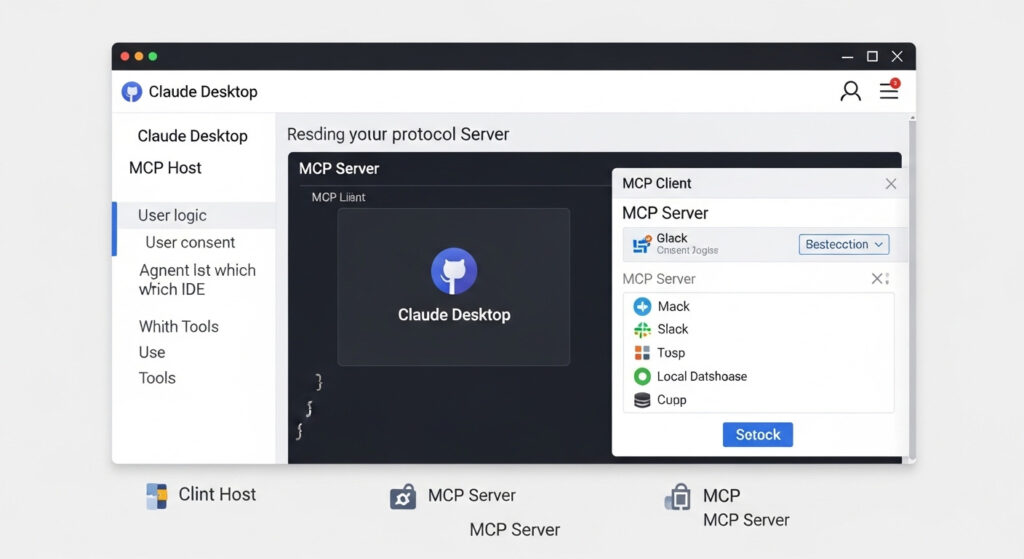

The Client-Host-Server Model

The protocol’s architecture is based on a three-participant model that ensures a clear separation of concerns and enhances security.

- MCP Host: This is the primary AI application the user interacts with (e.g., Claude Desktop, an AI-powered IDE). The Host contains the agent’s main logic, manages user consent, and orchestrates which tools to use.

- MCP Client: Residing within the Host, each Client manages a dedicated, one-to-one connection with a single MCP Server. It handles the low-level protocol communication for that specific session.

- MCP Server: This is a lightweight wrapper around an external tool or data source (e.g., GitHub, Slack, a local database). Its job is to expose the capabilities of that tool through the standardized MCP interface, making it discoverable and usable by any client.
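The relationship between the three participants can be sketched in a few lines of plain Python. All class and method names here (`Host`, `Client`, `Server`, `connect`, `call`) are hypothetical and are not the API of any official MCP SDK; the direct function call inside `Client.call_tool` stands in for a real JSON-RPC exchange over a transport. The point is the shape: one Host, one Client per Server, and the Host deciding which Client to use.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Server:
    """Hypothetical stand-in for an MCP server wrapping one external system."""
    name: str
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)


@dataclass
class Client:
    """One client per server: a dedicated one-to-one session."""
    server: Server

    def call_tool(self, tool: str, **kwargs: str) -> str:
        # A real client would send a JSON-RPC "tools/call" request over a transport.
        return self.server.tools[tool](**kwargs)


@dataclass
class Host:
    """The user-facing application: owns the clients and decides what to call."""
    clients: Dict[str, Client] = field(default_factory=dict)

    def connect(self, server: Server) -> None:
        # Every server the host connects to gets its own client instance.
        self.clients[server.name] = Client(server)

    def available_tools(self) -> Dict[str, List[str]]:
        return {name: sorted(c.server.tools) for name, c in self.clients.items()}

    def call(self, server_name: str, tool: str, **kwargs: str) -> str:
        return self.clients[server_name].call_tool(tool, **kwargs)


if __name__ == "__main__":
    github = Server("github", {"list_issues": lambda repo: f"issues for {repo}"})
    slack = Server("slack", {"post_message": lambda channel, text: f"posted to {channel}"})

    host = Host()
    host.connect(github)
    host.connect(slack)
    print(host.available_tools())
    print(host.call("github", "list_issues", repo="octo/demo"))
```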

Core Primitives: The Building Blocks of Communication

MCP defines a set of fundamental building blocks, called primitives, that represent the types of capabilities and information that can be exchanged. These primitives enable sophisticated, bidirectional communication between the AI and its tools.

| Primitive Name | Exposed By | Core Function | Use Case Example |
|---|---|---|---|
| Tools | Server | Enables the AI to perform actions with side effects in the external world. | Creating a new ticket in a project management system like Jira. |
| Resources | Server | Provides passive, structured data as context for the AI, similar to a RAG workflow. | Reading the content of a local file or a record from a database. |
| Prompts | Server | Offers reusable templates to guide user or AI interactions in a structured way. | A blogging platform server offers a “Generate a blog post outline” template. |
| Sampling | Client | Allows a server to request an LLM completion from the host’s AI model. | A code analysis server fetches code and asks the host’s LLM to find potential bugs. |
| Elicitation | Client | Enables a server to request additional input or confirmation from the user. | A payment tool asks the user to confirm a transaction amount before processing. |
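On the wire, MCP messages are JSON-RPC 2.0 objects, and each primitive corresponds to request methods in the specification: `tools/call`, `resources/read`, and `prompts/get` flow from client to server, while `sampling/createMessage` and `elicitation/create` flow from server to client. The sketch below only builds example requests; the tool name, prompt name, URI, and argument values are hypothetical, and the parameter shapes reflect the current specification as best understood here, so check them against the revision you target.

```python
import itertools
import json
from typing import Any, Dict

_ids = itertools.count(1)


def request(method: str, params: Dict[str, Any]) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object with a fresh integer id."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


# Client -> server: primitives exposed by the server.
call_tool = request("tools/call", {
    "name": "create_ticket",  # hypothetical tool name
    "arguments": {"project": "OPS", "summary": "Disk almost full"},
})
read_resource = request("resources/read", {"uri": "file:///project/README.md"})
get_prompt = request("prompts/get", {
    "name": "blog_post_outline",  # hypothetical prompt name
    "arguments": {"topic": "Model Context Protocol"},
})

# Server -> client: primitives exposed by the client.
create_message = request("sampling/createMessage", {
    "messages": [{"role": "user",
                  "content": {"type": "text",
                              "text": "Find bugs in: def add(a, b): return a - b"}}],
    "maxTokens": 300,
})
elicit = request("elicitation/create", {
    "message": "Confirm payment of $42.00?",
    "requestedSchema": {
        "type": "object",
        "properties": {"confirm": {"type": "boolean"}},
        "required": ["confirm"],
    },
})

if __name__ == "__main__":
    for msg in (call_tool, read_resource, get_prompt, create_message, elicit):
        print(json.dumps(msg))
```

Note that the same message format serves both directions; only the method name tells the receiver which primitive is being used.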

Communication and Transport Mechanisms

While the protocol itself is transport-agnostic (every message is a UTF-8 encoded JSON-RPC 2.0 object), the specification defines two standard transport mechanisms that cover the most common use cases.

| Characteristic | stdio Transport | Streamable HTTP Transport |
|---|---|---|
| Primary Use Case | Local tool integrations (e.g., IDE assistants, CLI tools). | Remote and web-based services (e.g., SaaS APIs, centralized tools). |
| Communication Channel | Subprocess standard input (stdin) and standard output (stdout). | HTTP POST/GET requests with optional Server-Sent Events (SSE). |
| Performance | Very low latency and minimal overhead. | Higher latency, dependent on the network. |
| Security Profile | Contained within the local machine; relies on OS permissions. | Exposed to the network; requires TLS, authentication, and web security best practices. |
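With the stdio transport, the specification frames messages very simply: each JSON-RPC message is written as one line, messages are delimited by newlines, and a message must not contain embedded newlines. The sketch below shows that framing; `write_message` and `read_messages` are hypothetical helper names, and `io.StringIO` stands in for the pipes a Host would open to a server subprocess.

```python
import io
import json
from typing import Any, Dict, Iterator, TextIO


def write_message(stream: TextIO, message: Dict[str, Any]) -> None:
    """Serialize one JSON-RPC message as a single newline-terminated line."""
    # json.dumps without `indent` escapes newlines inside strings as "\n",
    # so the serialized line never contains a raw newline.
    stream.write(json.dumps(message, ensure_ascii=False) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[Dict[str, Any]]:
    """Yield one parsed message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


if __name__ == "__main__":
    pipe = io.StringIO()  # stand-in for a subprocess stdin/stdout pipe
    write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
    write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                         "params": {"name": "echo", "arguments": {"text": "a\nb"}}})
    pipe.seek(0)
    for msg in read_messages(pipe):
        print(msg["id"], msg["method"])
```

Line-delimited framing is part of why stdio is so cheap: there are no headers or length prefixes to parse, just one message per line.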

Security and Authorization by Design

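Connecting powerful AI to sensitive systems requires a robust security model. MCP was designed with a trust-centric approach founded on four key pillars. The protocol cannot enforce these principles on its own; the specification expects implementers, above all Host applications, to build them in: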

- User Consent and Control: The user must explicitly understand and authorize all data access and tool execution. The Host application is responsible for providing clear consent interfaces.

- Data Privacy: User data cannot be transmitted to a server without permission, and all data should be protected with proper access controls and encryption.

- Tool Safety: Invoking any tool that can execute code or change state requires explicit user approval.

- LLM Sampling Controls: The user must approve any request from a server to use the host’s LLM (sampling) to prevent unauthorized or costly operations.
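Because enforcement falls to the Host, consent is typically a gate placed in front of every tool invocation and every server-initiated sampling request. The sketch below is a hypothetical illustration: `ConsentGate`, `ConsentDenied`, and the `approve` callback are invented names, and in a real Host the callback would be a UI dialog rather than a lambda.

```python
from typing import Any, Callable, Dict, List, Tuple

# approve(kind, detail) -> bool. In a real Host this would be a UI dialog.
Approver = Callable[[str, Dict[str, Any]], bool]


class ConsentDenied(Exception):
    """Raised when the user declines a requested action."""


class ConsentGate:
    """Hypothetical Host-side guard: nothing runs without explicit approval."""

    def __init__(self, approve: Approver) -> None:
        self.approve = approve
        self.audit_log: List[Tuple[str, Dict[str, Any], bool]] = []

    def _require(self, kind: str, detail: Dict[str, Any]) -> None:
        allowed = self.approve(kind, detail)
        self.audit_log.append((kind, detail, allowed))  # record every decision
        if not allowed:
            raise ConsentDenied(f"user declined {kind}: {detail}")

    def call_tool(self, run: Callable[..., Any], name: str,
                  arguments: Dict[str, Any]) -> Any:
        # Tool Safety: ask before invoking any tool.
        self._require("tool", {"name": name, "arguments": arguments})
        return run(**arguments)

    def sample(self, complete: Callable[[str], str], prompt: str, server: str) -> str:
        # LLM Sampling Controls: server-initiated completions need approval too.
        self._require("sampling", {"server": server, "prompt": prompt})
        return complete(prompt)


if __name__ == "__main__":
    # Demo policy: approve tool calls, refuse every sampling request.
    gate = ConsentGate(lambda kind, detail: kind == "tool")
    print(gate.call_tool(lambda path: f"read {path}", "read_file", {"path": "notes.txt"}))
    try:
        gate.sample(lambda p: "LLM output", "Summarize my inbox", server="mail")
    except ConsentDenied as err:
        print("blocked:", err)
```

Keeping an audit log of each decision is a design choice, not a protocol requirement, but it makes later review of what the agent was allowed to do straightforward.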

For remote (HTTP-based) connections, the MCP authorization specification builds on OAuth 2.1 as the framework for secure, delegated authorization. This allows users to grant limited access to their resources on a third-party service without ever sharing their credentials with the AI agent.

MCP vs. RAG vs. Custom APIs

It’s crucial to understand that MCP is not a replacement for other AI paradigms like Retrieval-Augmented Generation (RAG) but a complementary part of a modern AI context stack. In practice, the most capable AI systems often combine the two.

- RAG is about knowing. It retrieves relevant information from a static knowledge base to give the LLM context.

- MCP is about doing and querying. It enables the LLM to take action or query for live, real-time data from dynamic systems.
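A hybrid system can draw on both in the same request: RAG supplies background knowledge, and an MCP tool supplies live state. In the sketch below, the two-document corpus, the word-overlap ranking (a toy stand-in for vector search), and the `fake_order_status` function (a stand-in for an MCP `tools/call` to a hypothetical order-system server) are all illustrative assumptions.

```python
from typing import Callable, Dict, List

# Static knowledge base: what a RAG pipeline would index ahead of time.
DOCS: Dict[str, str] = {
    "refunds": "Refunds are issued within 14 days of an approved return.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}


def retrieve(query: str, k: int = 1) -> List[str]:
    """Toy RAG step: rank documents by word overlap with the query."""
    words = set(query.lower().split())
    ranked = sorted(DOCS.values(),
                    key=lambda text: len(words & set(text.lower().split())),
                    reverse=True)
    return ranked[:k]


def build_context(query: str, order_id: str,
                  order_status_tool: Callable[[str], str]) -> str:
    """Combine static knowledge (RAG) with live data (an MCP-style tool call)."""
    knowledge = retrieve(query)
    live = order_status_tool(order_id)  # with MCP: a tools/call to a server
    return "\n".join(["Knowledge:", *knowledge, "Live data:", live])


def fake_order_status(order_id: str) -> str:
    """Hypothetical tool result standing in for a real order-system server."""
    return f"Order {order_id}: shipped yesterday"


if __name__ == "__main__":
    print(build_context("how long does shipping take", "A-1001", fake_order_status))
```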

The following table clarifies when to use each approach:

| Dimension | Model Context Protocol (MCP) | Retrieval-Augmented Generation (RAG) | Custom API Integration |
|---|---|---|---|
| Primary Function | Action execution and real-time, structured data query. | Knowledge retrieval from a static corpus. | Bespoke connection to a single service. |
| Integration Scalability | High: Build a server once, use it with many clients. | High: Ingest data once, query from many applications. | Low: Requires a new integration for each client-service pair. |
| When to Use | For agentic workflows requiring actions, real-time data, or interaction with multiple heterogeneous tools. | For building Q&A systems over a large, relatively static body of documents or knowledge. | For simple, one-off integrations where a full protocol is overkill and scalability is not a concern. |

Conclusion: The Future of Agentic AI

The Model Context Protocol (MCP) has quickly established itself as a foundational open standard for agent-to-tool communication. By providing a universal, model-agnostic, and secure interface, it solves the critical integration challenges that previously hindered the development of truly capable AI agents.

Its strength lies in its composable architecture, robust security model, and the vibrant open-source ecosystem growing around it. For developers and architects building the next generation of AI, adopting MCP is more than a best practice: it is fast becoming the standard way to create scalable, interoperable, and trustworthy agentic systems.