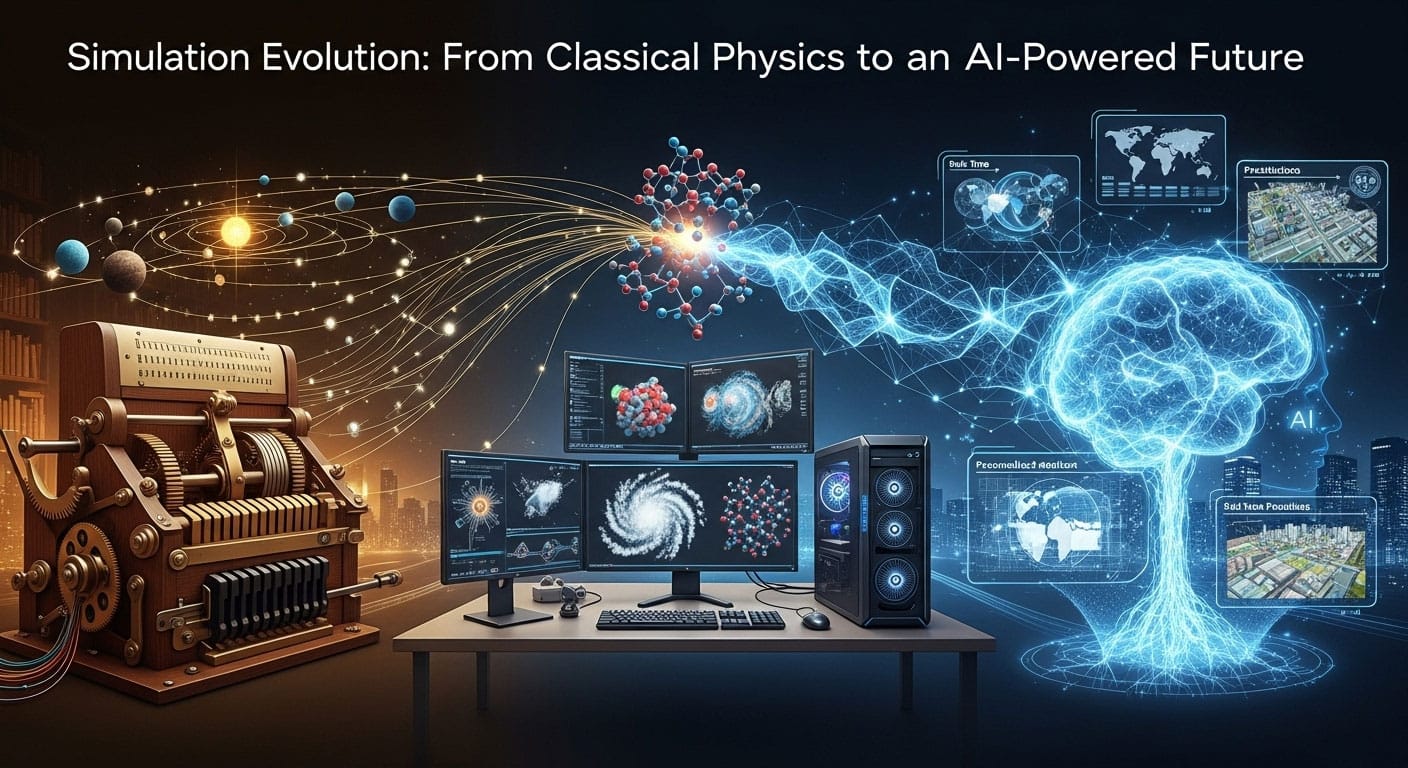

The simulation of physical systems stands as one of modern science’s greatest achievements: a field that has grown from abstract mathematical ideas into a primary engine of discovery. Its evolution has been a journey of increasing complexity and power, from the clockwork universe of classical mechanics to the probabilistic frontiers of quantum computing and artificial intelligence. This article traces the key milestones in that journey and the symbiotic relationship they reveal between physical theory, computational methods, and hardware innovation.

The Classical Foundations: Describing a Clockwork Universe

The ability to simulate the world first required a mathematical language to describe it. The 17th and 18th centuries provided this language through differential equations, establishing a deterministic view where the future could be predicted if the present state and governing laws were known.

Newtonian Mechanics: The Dawn of Prediction

The story begins with Sir Isaac Newton. His laws of motion, particularly the second law (F = ma), established a direct, predictive relationship between force, mass, and acceleration. Calculus, which Newton developed independently of Gottfried Wilhelm Leibniz, gave science the tools to solve these equations, making it possible to calculate trajectories and laying the conceptual groundwork for simulation: the universe as a grand, solvable system.
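To make the idea of prediction concrete, here is a minimal Python sketch (a modern illustration, not a historical method) that steps Newton's second law forward in time for a projectile under gravity alone, assuming no air resistance. The helper names `simulate_projectile` and `analytic_range` are illustrative. At the default step size, the numerical range should agree with the closed-form result v0² sin(2θ)/g to within about 0.1%.

```python
import math

def simulate_projectile(v0, angle_deg, g=9.81, dt=1e-3):
    """Integrate Newton's second law for a projectile (gravity only, no drag)
    until it returns to launch height; return the horizontal range in meters."""
    theta = math.radians(angle_deg)
    x, y = 0.0, 0.0
    vx, vy = v0 * math.cos(theta), v0 * math.sin(theta)
    while True:
        # a = F / m = (0, -g): the mass cancels when gravity is the only force
        vy -= g * dt                               # velocity from acceleration
        x_new, y_new = x + vx * dt, y + vy * dt    # then position from velocity
        if y_new < 0.0:
            # linearly interpolate to the point where y crosses zero
            frac = y / (y - y_new)
            return x + frac * (x_new - x)
        x, y = x_new, y_new

def analytic_range(v0, angle_deg, g=9.81):
    """Closed-form range on flat ground: v0^2 sin(2 theta) / g."""
    return v0**2 * math.sin(2 * math.radians(angle_deg)) / g

# Illustrative values: a 20 m/s launch at 45 degrees
print(f"numerical: {simulate_projectile(20.0, 45.0):.3f} m")
print(f"analytic:  {analytic_range(20.0, 45.0):.3f} m")
```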

Lagrangian & Hamiltonian Mechanics: A More Elegant Framework

While powerful, Newton’s force-based approach can become cumbersome for constrained systems, such as a pendulum whose bob must stay at a fixed distance from its pivot. This led to more abstract, energy-based reformulations:

- Lagrangian Mechanics: Joseph-Louis Lagrange shifted the focus from vector forces to scalar energies (kinetic and potential). This approach simplifies complex problems by using generalized coordinates and handling constraints implicitly.

- Hamiltonian Mechanics: William Rowan Hamilton introduced an even more abstract view using “phase space,” a high-dimensional space defined by positions and momenta. This formulation not only revealed deeper symmetries in physics but also provided the essential mathematical bridge to quantum mechanics.
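The pendulum shows both reformulations at work. As a sketch, assume a point mass m on a massless rod of length l. The Lagrangian L = ½ m l² θ̇² + m g l cos θ yields θ̈ = −(g/l) sin θ, and the equivalent Hamiltonian H = p²/(2 m l²) − m g l cos θ, with momentum p = m l² θ̇, gives Hamilton's equations θ̇ = ∂H/∂p and ṗ = −∂H/∂θ. The Python below integrates these with the symplectic Störmer-Verlet (leapfrog) scheme and, for contrast, with explicit Euler. The function names and parameter values are illustrative assumptions.

```python
import math

# Illustrative assumptions: point mass on a massless rod, SI units
GRAVITY, LENGTH, MASS = 9.81, 1.0, 1.0   # m/s^2, m, kg

def energy(theta, p):
    """Hamiltonian H = p^2 / (2 m l^2) - m g l cos(theta)."""
    return p**2 / (2 * MASS * LENGTH**2) - MASS * GRAVITY * LENGTH * math.cos(theta)

def dH_dtheta(theta):
    return MASS * GRAVITY * LENGTH * math.sin(theta)

def dH_dp(p):
    return p / (MASS * LENGTH**2)

def leapfrog(theta, p, dt, steps):
    """Stormer-Verlet (kick-drift-kick), a symplectic integrator."""
    for _ in range(steps):
        p -= 0.5 * dt * dH_dtheta(theta)   # half kick:  dp/dt = -dH/dtheta
        theta += dt * dH_dp(p)             # full drift: dtheta/dt = dH/dp
        p -= 0.5 * dt * dH_dtheta(theta)   # half kick
    return theta, p

def explicit_euler(theta, p, dt, steps):
    """Non-symplectic baseline: both updates use the old state."""
    for _ in range(steps):
        theta, p = theta + dt * dH_dp(p), p - dt * dH_dtheta(theta)
    return theta, p

def relative_drift(integrator, theta0=1.0, p0=0.0, dt=0.01, steps=10_000):
    """|H(end) - H(start)| / |H(start)| after `steps` steps starting at (theta0, p0)."""
    e0 = energy(theta0, p0)
    theta, p = integrator(theta0, p0, dt, steps)
    return abs(energy(theta, p) - e0) / abs(e0)

print(f"leapfrog energy drift:       {relative_drift(leapfrog):.2e}")
print(f"explicit Euler energy drift: {relative_drift(explicit_euler):.2e}")
```

Because leapfrog preserves the phase-space structure of Hamilton's equations, its energy error stays small and bounded over long runs, whereas explicit Euler steadily pumps energy into the pendulum.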

Fluid Dynamics: Modeling Continuous Matter

Parallel to describing discrete particles, scientists tackled the motion of fluids. This began with idealized principles such as Bernoulli’s equation (relating pressure and velocity along a streamline) and progressed to the Euler equations for frictionless (inviscid) flow. The definitive classical description came with the Navier-Stokes equations, which add viscosity (internal friction). These equations describe everyday fluids such as air and water with remarkable accuracy, but they are nonlinear and notoriously difficult to solve, especially for chaotic phenomena like turbulence, which made them a primary driver for the development of computational simulation.
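As a small worked example of Bernoulli's equation, assume steady, incompressible, frictionless flow in a horizontal pipe. Combining it with mass conservation (A₁v₁ = A₂v₂) gives the pressure drop at a constriction, the principle behind a Venturi meter. `venturi_pressure_drop` is an illustrative name. For water (ρ = 1000 kg/m³) flowing at 1 m/s into a section with half the cross-sectional area, the velocity doubles and the pressure falls by ½ · 1000 · (2² − 1²) = 1500 Pa.

```python
def venturi_pressure_drop(rho, v1, a1, a2):
    """Pressure drop p1 - p2 (Pa) from a section of area a1 to a constriction
    of area a2 in a horizontal pipe, assuming steady, incompressible,
    inviscid flow along a streamline.

    Continuity: a1 * v1 = a2 * v2
    Bernoulli:  p1 + rho * v1**2 / 2 = p2 + rho * v2**2 / 2
    """
    v2 = v1 * a1 / a2                       # mass conservation fixes v2
    return 0.5 * rho * (v2**2 - v1**2)      # Bernoulli gives the pressure change

# Water at 1 m/s entering a constriction with half the cross-sectional area
print(venturi_pressure_drop(rho=1000.0, v1=1.0, a1=2.0, a2=1.0))  # 1500.0
```

Viscosity, the effect the Navier-Stokes equations add, is exactly what this idealization leaves out.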

The Computational Leap: Making Continuous Physics Discrete

The beautiful, continuous equations of classical physics have no closed-form solutions for most real-world problems. Digital computers called for a new approach: translating continuous physical laws into discrete, solvable algorithms. This process, known as discretization, is the foundation of modern computational physics.

The Rise of Numerical Methods

Discretization involves breaking a problem’s continuous space and time into a finite grid or mesh. The derivatives in the physical equations are then replaced with algebraic approximations that relate the values at these discrete points. This converts an intractable differential equation into a large system of solvable algebraic equations.
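A minimal Python sketch of this process, assuming the simple model problem −u″(x) = f(x) on [0, 1] with u(0) = u(1) = 0: replacing u″ at each grid point by the central difference (u_{i−1} − 2u_i + u_{i+1})/h² produces a tridiagonal linear system that NumPy solves directly. The function names and the test problem are illustrative choices.

```python
import numpy as np

def solve_poisson_1d(f, n):
    """Solve -u''(x) = f(x) on [0, 1] with u(0) = u(1) = 0 on n interior points.

    Replacing u'' by the central difference (u[i-1] - 2 u[i] + u[i+1]) / h**2
    at every interior point turns the ODE into the linear system A u = f(x).
    """
    h = 1.0 / (n + 1)
    x = np.linspace(h, 1.0 - h, n)        # interior grid points, spacing h
    # Tridiagonal matrix: 2 on the diagonal, -1 on both off-diagonals, over h^2
    A = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2
    u = np.linalg.solve(A, f(x))
    return x, u

def source(x):
    # Illustrative test problem: exact solution is u(x) = sin(pi x)
    return np.pi**2 * np.sin(np.pi * x)

for n in (50, 100, 200):
    x, u = solve_poisson_1d(source, n)
    print(n, np.max(np.abs(u - np.sin(np.pi * x))))
```

Doubling the number of grid points should cut the maximum error by roughly a factor of four, the signature of a second-order method.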

Grid-Based vs. Particle-Based Approaches

Several methods emerged to perform this discretization, each with unique strengths:

- Finite Difference Method (FDM): An intuitive method that approximates derivatives on a structured grid. It’s efficient for simple geometries.

- Finite Element Method (FEM): A highly versatile method that divides a domain into a mesh of simple shapes (elements). It excels at handling complex, irregular geometries like engine blocks or aircraft wings.

- Particle-Based Methods (e.g., Smoothed Particle Hydrodynamics, SPH): Mesh-free methods in which the material is represented by a collection of moving particles. These are well suited to problems with large deformations, splashes, or fragmentation, such as a dam break or a planetary impact.
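The particle-based idea can be sketched in a few lines. In SPH, a field is estimated by smoothing over neighboring particles with a kernel W of width h; the most basic case is density, ρ_i = Σ_j m_j W(x_i − x_j, h). The Python below assumes a 1D cubic-spline kernel (a common choice, though many others exist) and a row of equally spaced particles. Function names and parameters are illustrative.

```python
import numpy as np

def cubic_spline_kernel(r, h):
    """1D cubic-spline SPH kernel W(r, h), normalized so it integrates to 1."""
    q = np.abs(r) / h
    sigma = 2.0 / (3.0 * h)                      # 1D normalization constant
    w = np.where(q < 1.0, 1.0 - 1.5 * q**2 + 0.75 * q**3,
                 np.where(q < 2.0, 0.25 * (2.0 - q)**3, 0.0))
    return sigma * w

def sph_density(positions, masses, h):
    """Density at each particle: rho_i = sum_j m_j W(x_i - x_j, h)."""
    separations = positions[:, None] - positions[None, :]   # all pairs
    return (masses[None, :] * cubic_spline_kernel(separations, h)).sum(axis=1)

# Illustrative setup: 101 equally spaced particles on [0, 1], reference density 1000
rho0, dx = 1000.0, 0.01
positions = np.linspace(0.0, 1.0, 101)
masses = np.full(positions.size, rho0 * dx)   # each particle carries rho0 * dx of mass
density = sph_density(positions, masses, h=1.2 * dx)
print("interior:", density[50], " edge:", density[0])
```

Interior particles recover the reference density to within a fraction of a percent, while particles at the ends come out noticeably too light because half their neighborhood is empty, a well-known SPH boundary deficiency.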

The Quantum Revolution: Simulating a Probabilistic Reality

At the atomic scale, classical mechanics fails. The quantum world is governed by probability and superposition. Simulating it on a classical computer is extremely difficult because the resources required grow exponentially with the number of particles, an exponential explosion that Richard Feynman highlighted in his 1981 lecture “Simulating Physics with Computers.”
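The arithmetic behind this explosion is short. Assume each particle is a two-level system (a qubit) and each complex amplitude is stored in 16 bytes (double-precision complex). The full state of n particles then needs 16 × 2ⁿ bytes, so 30 particles already require 16 GiB and 50 require 16 PiB, because every added particle doubles the memory. The helper below is illustrative.

```python
def state_vector_bytes(n_particles, bytes_per_amplitude=16):
    """Bytes needed to store the full quantum state of n two-level particles.

    The state is a vector of 2**n complex amplitudes; 16 bytes per amplitude
    assumes double-precision complex numbers (an illustrative choice).
    """
    return bytes_per_amplitude * 2**n_particles

GIB = 2**30
for n in (10, 20, 30, 40, 50):
    print(f"{n:2d} particles: {state_vector_bytes(n) / GIB:.3g} GiB")
```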

From Schrödinger’s Equation to Feynman’s Path Integrals

Two key formulations describe quantum evolution:

- The Schrödinger Equation: The fundamental equation of quantum mechanics, it governs how a system’s wave function (which encodes the probabilities of every possible measurement outcome) evolves over time.

- Feynman’s Path Integral: An alternative view in which the probability amplitude for a particle to travel between two points is found by summing contributions from every possible path it could take, each weighted by a phase set by that path’s classical action. This elegant formulation underpins powerful computational techniques such as Path Integral Monte Carlo.
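For a system small enough to store exactly, the Schrödinger equation with a time-independent Hamiltonian H has the solution |ψ(t)⟩ = exp(−iHt/ħ)|ψ(0)⟩. The Python sketch below assumes a two-level system driven by H = (Ω/2)σₓ in units where ħ = 1 and evaluates the exponential through the eigendecomposition of H. The drive strength and function name are illustrative. The probability of finding the system in |1⟩ should follow the analytic Rabi formula sin²(Ωt/2), reaching 1 at t = π/Ω.

```python
import numpy as np

# Pauli-X matrix; H = (omega / 2) * sigma_x drives transitions |0> <-> |1>
SIGMA_X = np.array([[0, 1], [1, 0]], dtype=complex)

def evolve(psi0, hamiltonian, t, hbar=1.0):
    """Exact solution of i*hbar d|psi>/dt = H|psi> for time-independent H:
    |psi(t)> = exp(-i H t / hbar) |psi(0)>, built from H's eigendecomposition."""
    energies, vecs = np.linalg.eigh(hamiltonian)
    phases = np.exp(-1j * energies * t / hbar)
    return vecs @ (phases * (vecs.conj().T @ psi0))

omega = 2.0                                   # illustrative drive strength (hbar = 1)
H = 0.5 * omega * SIGMA_X
psi0 = np.array([1, 0], dtype=complex)        # start in |0>

for t in np.linspace(0.0, np.pi / omega, 5):
    p1 = abs(evolve(psi0, H, t)[1])**2        # probability of finding |1>
    print(f"t = {t:.3f}  P(|1>) = {p1:.3f}  analytic = {np.sin(omega * t / 2)**2:.3f}")
```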

Quantum Simulation: Using Quantum to Model Quantum

Feynman realized that the best way to overcome the exponential challenge was to use a controllable quantum system to simulate another quantum system. This idea, now known as quantum simulation, circumvents the classical bottleneck by naturally using the exponentially large state space of quantum mechanics itself. It holds particular promise for materials science and drug discovery.

Pinnacles of Modern Computation: Grand-Scale Simulation Projects

Modern supercomputers enable “grand-challenge” simulations that act as virtual laboratories to explore the universe, our planet, and the building blocks of matter.

Simulating the Cosmos

Cosmological simulations test our understanding of the universe’s evolution. A succession of projects has added increasing physical realism.

| Project | Primary Focus | Key Physics Included | Key Contribution |
|---|---|---|---|
| Bolshoi (2010) | Dark Matter Halos | Gravity (Dark Matter Only) | Provided a high-precision benchmark for how cosmic structure forms. |
| IllustrisTNG (2017) | Galaxy Formation | Hydrodynamics, Star Formation, Black Hole Feedback, Magnetic Fields | Reproduced realistic galaxy populations and linked them to black hole activity. |
| FLAMINGO (2023) | Large-Scale Structure | Hydrodynamics, Star Formation, Black Hole and Supernova Feedback, Massive Neutrinos | Largest cosmological hydrodynamical simulation at its release; used machine learning to calibrate its subgrid physics against observations. |

Modeling Planet Earth and Beyond

- Climate Modeling: Projects run on Japan’s Earth Simulator pioneered high-resolution, coupled models of the atmosphere and oceans. Modern Global Climate Models (GCMs) produce the climate projections assessed in IPCC reports, helping us understand and project climate change.

- Engineering & Biology: The Frontier exascale supercomputer has enabled record-breaking simulations of molecular dynamics for materials science and massive fluid dynamics calculations for hypersonic vehicle design. The Blue Brain Project pursues a different frontier: creating a detailed digital reconstruction of a mammalian brain to understand its circuitry and function.

The New Paradigm: How AI Is Accelerating the Simulation Evolution

The traditional approach of using brute-force computation to solve equations is being transformed by Artificial Intelligence. AI is not just making simulations faster; it’s fundamentally changing how they are built and used. This fusion marks the latest leap forward in the simulation evolution.

AI Surrogate Models: Simulating at the Speed of Inference

A “surrogate model” is a machine-learning model trained on data from a high-fidelity simulator. Once trained, it can predict the simulation’s output orders of magnitude faster than the original, enabling real-time analysis and large-scale design optimization that would otherwise be impractical. Physics-Informed Neural Networks (PINNs) go further by building the governing physical equations into the training loss, penalizing predictions that violate them, which can make the models more accurate and data-efficient.
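Here is a minimal illustration of the surrogate workflow, with deliberate simplifications. The “expensive simulator” is a damped oscillator integrated with many small time steps, and a least-squares polynomial stands in for the neural network so the sketch needs only NumPy. The parameter range, step size, and names are illustrative assumptions. The pattern is the same as in practice: pay for a handful of full simulations up front, then answer new queries with a cheap evaluation.

```python
import numpy as np

def expensive_simulator(c, t_end=5.0, dt=1e-3):
    """Stand-in 'high-fidelity' simulation (illustrative): integrate
    x'' + c x' + x = 0 from x(0) = 1, x'(0) = 0; return x(t_end)."""
    x, v = 1.0, 0.0
    for _ in range(int(round(t_end / dt))):
        v += dt * (-c * v - x)    # semi-implicit Euler: update velocity first
        x += dt * v
    return x

# 1. Run the expensive simulator at a handful of training parameters.
c_train = np.linspace(0.1, 1.0, 20)
y_train = np.array([expensive_simulator(c) for c in c_train])

# 2. Fit a cheap surrogate (degree-6 polynomial in place of a neural network).
surrogate = np.polynomial.Polynomial.fit(c_train, y_train, deg=6)

# 3. Query the surrogate at unseen parameters: one polynomial evaluation per
#    query instead of 5000 time steps.
c_test = np.array([0.137, 0.42, 0.777, 0.95])
y_true = np.array([expensive_simulator(c) for c in c_test])
print("max surrogate error:", np.max(np.abs(surrogate(c_test) - y_true)))
```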

Machine Learning in the Loop: Calibration and Analysis

Machine learning is now being used directly inside the simulation workflow. As seen in the FLAMINGO project, ML can efficiently calibrate the complex “subgrid” parameters that represent small-scale physics, ensuring the simulation better matches reality. ML algorithms are also essential for finding patterns in the petabytes of data modern simulations produce.
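The calibration loop can be sketched with a toy problem. The Python below is a simplified illustration, not FLAMINGO’s pipeline. A hypothetical one-parameter “simulator” (explicit finite differences for heat diffusion with unknown diffusivity κ) is run at a few design points, a polynomial emulator is fitted to its output, and κ is then chosen so the emulated observable matches a synthetic “observation” generated from a known κ. Real calibration pipelines typically use Gaussian-process or neural-network emulators, many parameters, and real data; every name and value here is an assumption.

```python
import numpy as np

# Illustrative assumption: the unknown diffusivity lies somewhere in this range
KAPPA_RANGE = (0.5, 2.0)

def heat_simulation(kappa, n=50, t_end=0.1, n_steps=2000):
    """Toy simulator (hypothetical): explicit finite differences for
    u_t = kappa * u_xx on [0, 1], u(0) = u(1) = 0, u(x, 0) = sin(pi x).
    Returns the 'observable' u(0.5, t_end)."""
    x = np.linspace(0.0, 1.0, n + 1)
    dx = 1.0 / n
    dt = t_end / n_steps
    r = kappa * dt / dx**2          # explicit scheme needs r <= 0.5; here r <= 0.25
    u = np.sin(np.pi * x)
    u[0] = u[-1] = 0.0
    for _ in range(n_steps):
        u[1:-1] = u[1:-1] + r * (u[2:] - 2.0 * u[1:-1] + u[:-2])
    return u[n // 2]

# 1. Run the simulator at a few design points and fit a cheap emulator to them
#    (a polynomial stands in for a Gaussian process or neural network).
kappa_design = np.linspace(*KAPPA_RANGE, 8)
obs_design = np.array([heat_simulation(k) for k in kappa_design])
emulator = np.polynomial.Polynomial.fit(kappa_design, obs_design, deg=4)

# 2. A synthetic "observation" produced from a known kappa, so the answer can be checked.
kappa_true = 1.3
observed = heat_simulation(kappa_true)

# 3. Calibrate: choose the kappa whose emulated observable best matches the observation.
kappa_grid = np.linspace(*KAPPA_RANGE, 10_001)
kappa_hat = kappa_grid[np.argmin((emulator(kappa_grid) - observed) ** 2)]
print(f"true kappa = {kappa_true}, calibrated kappa = {kappa_hat:.4f}")
```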

LLMs as “AI Co-Scientists”: A New Research Interface

Large Language Models (LLMs) are emerging as powerful collaborators. They can accelerate research by generating simulation code, debugging, and writing documentation. More profoundly, specialized LLMs are being developed to query massive scientific datasets, summarize findings, and even help generate new hypotheses, acting as “AI co-scientists” that augment human creativity and intuition.

Conclusion: The Future of Discovery

The history of physical simulation is a story of a powerful, symbiotic evolution driven by the interplay of theory, computation, and hardware. Theoretical questions demanded computational solutions, which in turn required more powerful hardware. The results from these simulations then revealed new complexities, driving theory forward once again. Simulation has matured from a validation tool into a primary pillar of science, standing alongside theory and experiment. The fusion with AI is accelerating this trend, promising a future where human researchers, assisted by Artificial Intelligence, can tackle scientific challenges at an unprecedented pace and scale.